Our Scalable Kubernetes Service (SKS) can be used for a wide range of applications. Some of them use APIs or databases from external services. Today, such services usually authenticate clients securely over TLS. However, some older systems and services still require whitelisting of fixed, hard-coded IP addresses.

By nature, Kubernetes is highly available. Applications (in their containers) are spread across multiple compute nodes. When a cluster scales up, for example, a new node is booted and new replicas of containers are started. This makes it unpredictable which IP address(es) an application will get, or when they are about to change.

To overcome this problem, we will create a simple NAT gateway that gives us a static outbound IP address.

Requirements:

- An SKS Kubernetes cluster

- Kubectl

- Access to Exoscale via the CLI or the Portal

This article primarily uses the Exoscale CLI.

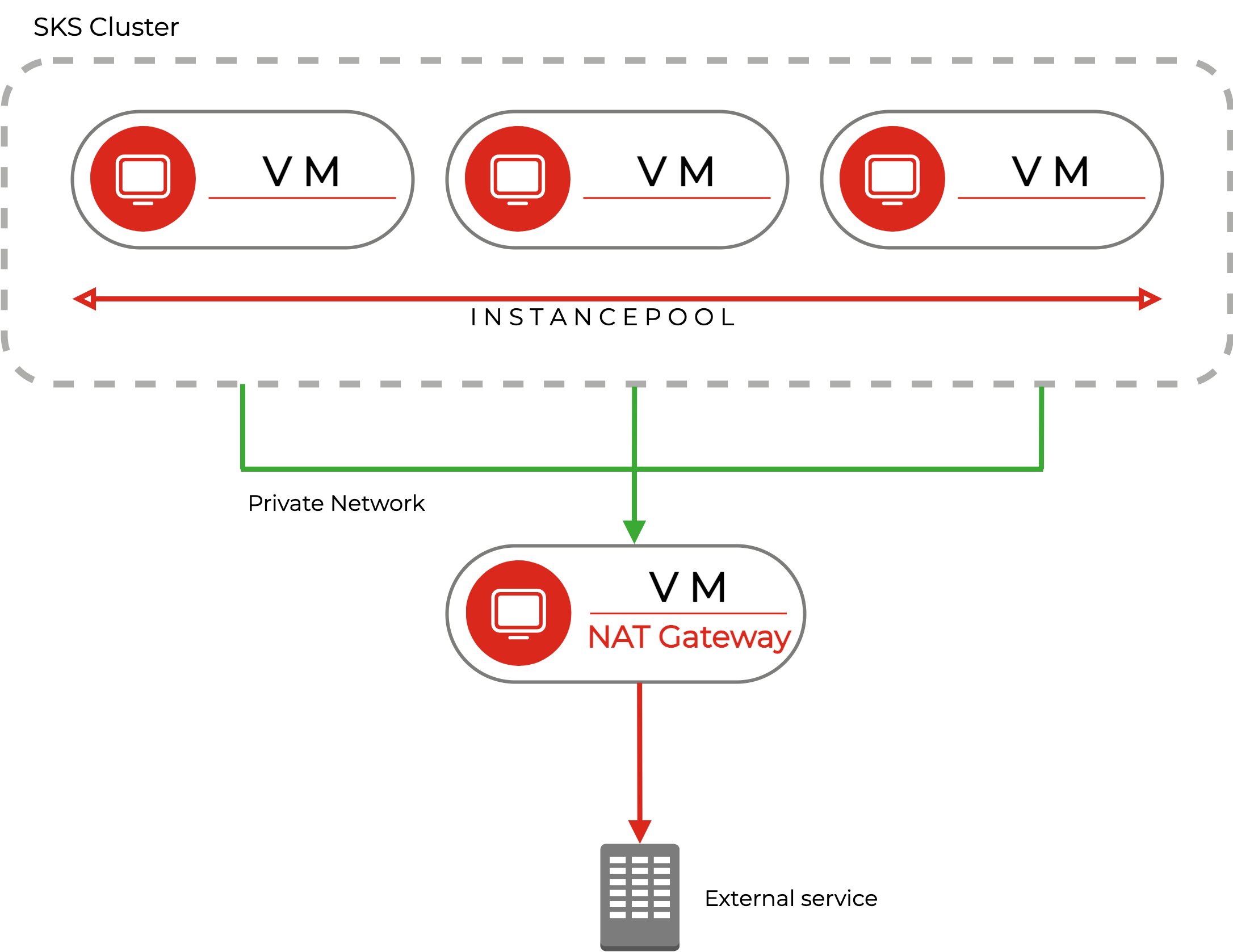

Architecture

First, let’s have a look at the architecture we want to achieve. We will attach the nodes of a Kubernetes cluster to a private network and spin up a separate virtual machine acting as a gateway. This gateway is attached to the same private network as the Kubernetes cluster. This enables us to create a Kubernetes DaemonSet that routes traffic for specific IP addresses over the gateway.

Setting up the private network

As mentioned above, it is impossible to predict which or how many nodes we will have after a scale-up. SKS clusters therefore require a managed network that assigns (private) IP addresses automatically. A managed private network is just a Layer 2 network with a simple DHCP server.

In this example, a new private network called private-cluster-network is created and it will automatically assign IPs in the range of 10.0.0.150 to 10.0.0.250. The remaining IPs of the subnet can be used for manual assignments.
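To see which addresses stay free for manual assignment, the range check can be sketched in plain POSIX shell. The helper names `ip_to_int` and `in_dhcp_range` are made up for this illustration and are not part of the Exoscale CLI; only the range boundaries come from the example above.

```shell
#!/bin/sh
# Hypothetical helpers (not part of the exo CLI): check whether an address
# falls inside the DHCP range of the example private network.

START_IP="10.0.0.150"
END_IP="10.0.0.250"

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeeds (exit status 0) if the address is handed out by DHCP.
in_dhcp_range() {
  addr=$(ip_to_int "$1")
  [ "$addr" -ge "$(ip_to_int "$START_IP")" ] && [ "$addr" -le "$(ip_to_int "$END_IP")" ]
}

# 10.0.0.1 lies outside the DHCP range, so it can be assigned manually.
in_dhcp_range 10.0.0.1 || echo "10.0.0.1 is free for static use"
```

With these boundaries, any address from 10.0.0.1 to 10.0.0.149 and from 10.0.0.251 to 10.0.0.254 can be assigned manually without colliding with a DHCP lease.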

    exo compute private-network create private-cluster-network \
      --description "Private Network with a NAT Gateway" \
      --netmask 255.255.255.0 \
      --start-ip 10.0.0.150 \
      --end-ip 10.0.0.250

Next, we will create a new node pool for our SKS cluster with our private network attached:

    exo compute sks nodepool add MY-SKS-CLUSTER workernodes \
      --description "SKS Worker nodes" \
      --instance-prefix worker \
      --instance-type standard.medium \
      --security-group sks-security-group \
      --private-network private-cluster-network

Note that you most likely need a security group for SKS as explained in our SKS Quick Start Guide.

Instead of creating a new node pool you can also attach the private network to an existing one:

    exo compute sks nodepool update MY-SKS-CLUSTER NODEPOOLNAME \
      --private-network private-cluster-network

The change only applies to newly created nodes. You therefore need to either attach the private network to every existing instance of the pool as well, or cycle the node pool.

Setting up the gateway instance

Next, we will create a virtual machine acting as a gateway. Its public IP is the address that external services will see.

But first, we need to write a cloud-init script for automation. Cloud-init is used to initialize an instance on boot. We want to achieve four things:

- Upgrade all packages

- Enable the private network interface, and set an internal IP (this example uses 10.0.0.1)

- Enable masquerading for the private network so that routed traffic can reach the internet

- Enable IPv4 routing

Take a look at this cloud-init file:

    #cloud-config
    package_update: true
    package_upgrade: true
    write_files:
      - path: /etc/netplan/eth1.yaml
        permissions: 0644
        owner: root
        content: |
          network:
            version: 2
            ethernets:
              eth1:
                addresses:
                  - 10.0.0.1/24
      - path: /etc/systemd/system/config-iptables.service
        permissions: 0644
        owner: root
        content: |
          [Unit]
          Description=Configures iptable rules
          After=network.target
          [Service]
          Type=oneshot
          RemainAfterExit=true
          ExecStart=/sbin/iptables -t nat -A POSTROUTING -s 10.0.0.0/24 -o eth0 -j MASQUERADE
          [Install]
          WantedBy=multi-user.target
      - path: /etc/sysctl.d/50-ip_forward.conf
        permissions: 0644
        owner: root
        content: |
          net.ipv4.ip_forward=1
    runcmd:
      - [ netplan, apply ]
      - systemctl enable config-iptables.service --now
      - sysctl -p /etc/sysctl.d/50-ip_forward.conf

Creating and applying the netplan configuration enables the private network interface. As iptables rules are not persistent by default, we use a simple systemd unit to create the SNAT/masquerading rule on every boot. IPv4 routing is enabled by creating and applying a corresponding sysctl file.

When you create a virtual machine via the Portal, you can paste the script into the User-Data field.

In this case, we use the CLI:
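Once the instance has booted, the result of these steps can be checked on the gateway itself. The following is only a sketch: the helper names `has_masquerade_rule` and `forwarding_enabled` are invented for this example, and it assumes the subnet and interface names used in the cloud-init file above.

```shell
#!/bin/sh
# Hypothetical check: reads `iptables -t nat -S POSTROUTING` output on stdin
# and succeeds if the masquerading rule from the cloud-init file is listed.
has_masquerade_rule() {
  grep -q -e '-s 10\.0\.0\.0/24 -o eth0 -j MASQUERADE'
}

# Succeeds if the given value (from `sysctl -n net.ipv4.ip_forward`) is 1.
forwarding_enabled() {
  [ "$1" = "1" ]
}

# Usage on the gateway, as root:
#   iptables -t nat -S POSTROUTING | has_masquerade_rule && echo "NAT rule present"
#   forwarding_enabled "$(sysctl -n net.ipv4.ip_forward)" && echo "IPv4 forwarding on"
```

If either check fails, `systemctl status config-iptables.service` and the cloud-init log under `/var/log/cloud-init-output.log` are reasonable places to start looking.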

    exo compute instance create \
      --cloud-init /Path/to/cloud-init.yaml \
      --disk-size 10 \
      --ssh-key your-ssh-keyname \
      --security-group default \
      gateway-test

As of September 2022, this creates an instance running Ubuntu 20.04 LTS 64-bit. The security group does not require any specific rules for the gateway functionality, but you might want to allow SSH from your IP address for debugging purposes.

We attach the private network afterward, which allows us to specify an IP. This is not strictly required, because we already configured the address statically on the instance, but this way the IP is also shown in the Portal:

exo compute instance private-network attach --ip 10.0.0.1 gateway-test private-cluster-network

Setting up a route in SKS

The cluster and the gateway instance are now in the same private network, so the gateway is able to NAT traffic originating from the private network. The only thing missing is a route that defines which traffic should flow through the gateway.

For that, we will use a Kubernetes DaemonSet. It deploys a privileged container on every node, which adds a route on the node so that traffic for a target IP is sent over the gateway (10.0.0.1). In the example below, the IP 203.0.113.10/32 is re-routed. Specify here the IP (or subnet) of the service that requires whitelisting.

The container will apply the rules and then idle.

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: add-static-route
      namespace: default
    spec:
      selector:
        matchLabels:
          name: add-static-route
      template:
        metadata:
          name: add-static-route
          labels:
            name: add-static-route
        spec:
          hostNetwork: true
          containers:
            - image: alpine:3.15
              command: [ "/bin/sh", "-c" ]
              args:
                - |
                  ROUTE="203.0.113.10/32"
                  GW="10.0.0.1"
                  while
                    echo "Check for the interface and add the route if needed"
                    ifconfig eth1 > /dev/null 2>&1
                    if [ $? -eq 0 ]
                    then
                      ip r | grep "${ROUTE} dev eth1"
                      if [ $? -ne 0 ]
                      then
                        /sbin/route add -net ${ROUTE} gw ${GW}
                      else
                        ip r | grep "${ROUTE} via ${GW} dev eth1"
                        if [ $? -ne 0 ]
                        then
                          /sbin/route add -net ${ROUTE} gw ${GW}
                        fi
                      fi
                      /bin/sleep 604800
                    else
                      /bin/sleep 60
                    fi
                  do :; done
                  while true; do sleep 60; done
              imagePullPolicy: IfNotPresent
              name: ubuntu
              securityContext:
                privileged: true
                capabilities:
                  add:
                    - NET_ADMIN
          restartPolicy: Always

You now just need to apply the manifest with kubectl apply -f manifest.yaml.

To check the result, start a busybox pod and run traceroute against the target IP. The gateway should show up as a hop:
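A detail that helps when checking the result on a node: `ip route` lists host routes without the `/32` suffix, so a literal search for `203.0.113.10/32` will not match an existing route. The helper below is a minimal sketch of a check that accounts for this. `route_present` is a hypothetical name, and the sketch assumes the private interface is `eth1`, as in the manifest above.

```shell
#!/bin/sh
# Hypothetical helper: reads `ip route` output on stdin and succeeds if a
# route to DEST via GW on eth1 is listed. A trailing /32 is stripped from
# DEST first, because host routes are printed without it.
route_present() {
  dest=${1%/32}
  gw=$2
  awk -v d="$dest" -v g="$gw" '
    $1 == d && $2 == "via" && $3 == g && $4 == "dev" && $5 == "eth1" { found = 1 }
    END { exit !found }
  '
}

# Usage on a node:
#   ip route | route_present "203.0.113.10/32" "10.0.0.1" && echo "route is in place"
```

The exact field comparison avoids false matches on addresses that merely share a prefix, such as 203.0.113.100.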

kubectl run -i --tty busybox --rm --image=busybox -- sh

traceroute 203.0.113.10

Your NAT gateway is now ready!

Conclusion

Traffic to the specified destination is now routed through the gateway. For that, we used a normal Linux instance. While it is not fully optimized for NAT traffic, it is suitable for most simple cases, especially as instances at Exoscale benefit from up to 10 gigabit per second of bandwidth. Based on this architecture, you can consider automating everything with Terraform. Going further, you can even achieve high availability by using multiple gateways. Naturally, you can also use this kind of setup with plain Instance Pools without SKS.