Exoscale SKS (Scalable Kubernetes Service) lets you scale your application easily and keep it highly available.

However, suppose you run your own database inside the cluster: what about storage?

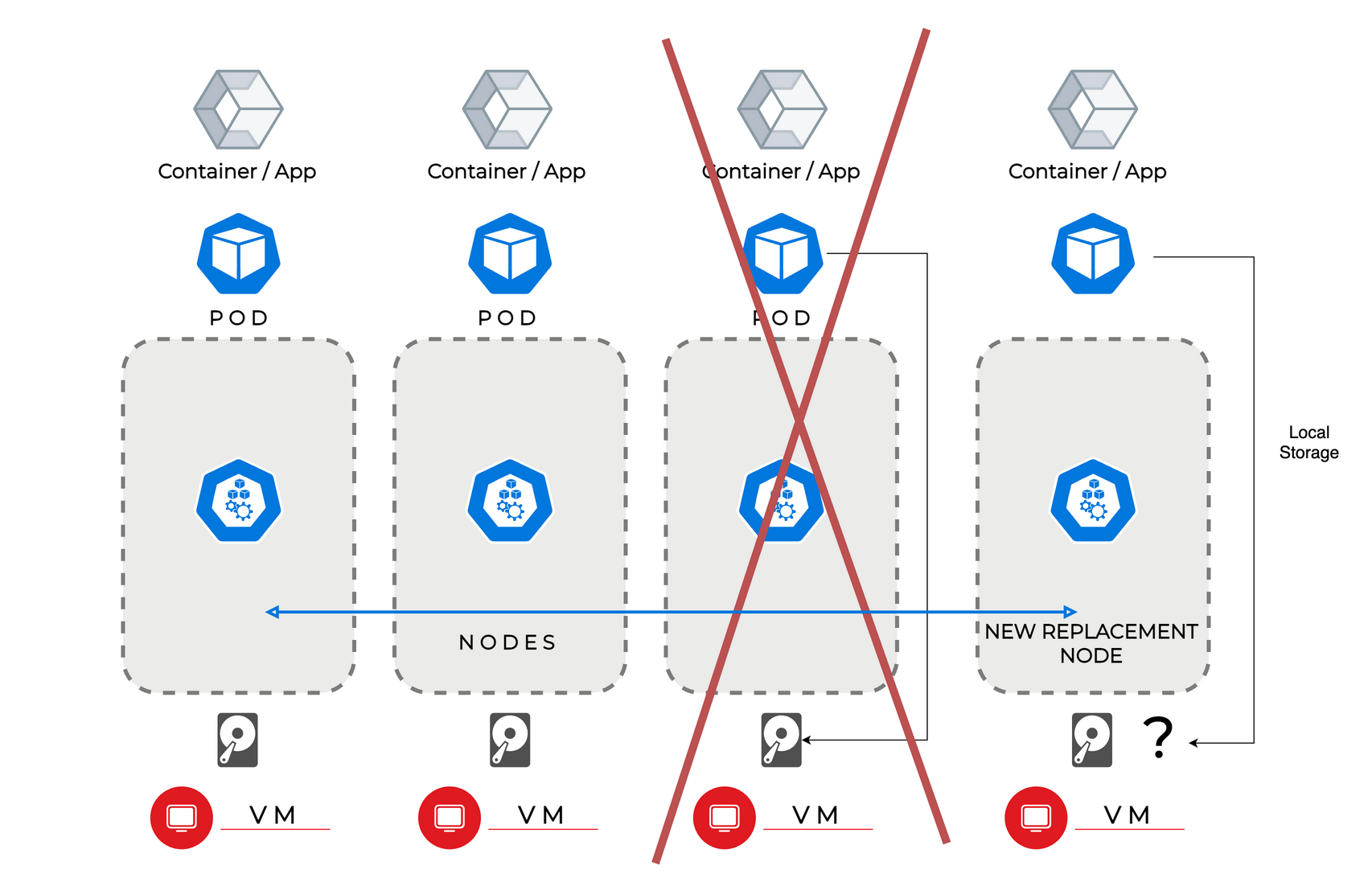

Every node in the Kubernetes cluster comes with a local disk. In theory, you can therefore simply use this storage to save the data of your Pod (roughly, a container in the non-Kubernetes world), as depicted in the picture above.

But what happens when the Pod or even the whole Node fails? Kubernetes reschedules a failed Pod, either on the same Node (provided it is still healthy) or on another Node. If the data is saved locally, however, it is only available on the Node where it was written, so a Pod rescheduled on another Node cannot access it. And if the Node fails completely, your data is lost for good.

This means you can’t take advantage of the real benefits of Kubernetes (high availability, scalability) this way. For data safety reasons, we don’t recommend storing important data (solely) on local disks.

Distributing storage using Longhorn

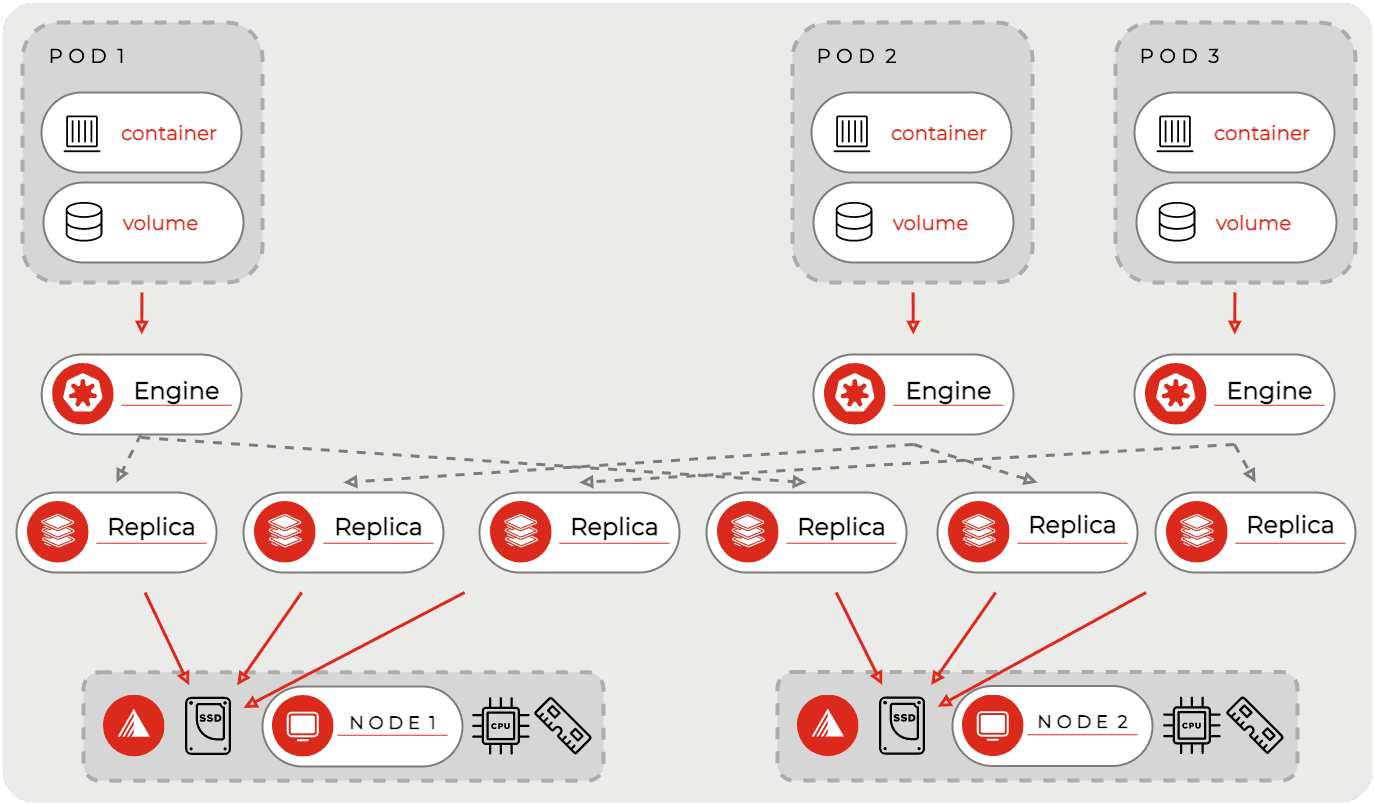

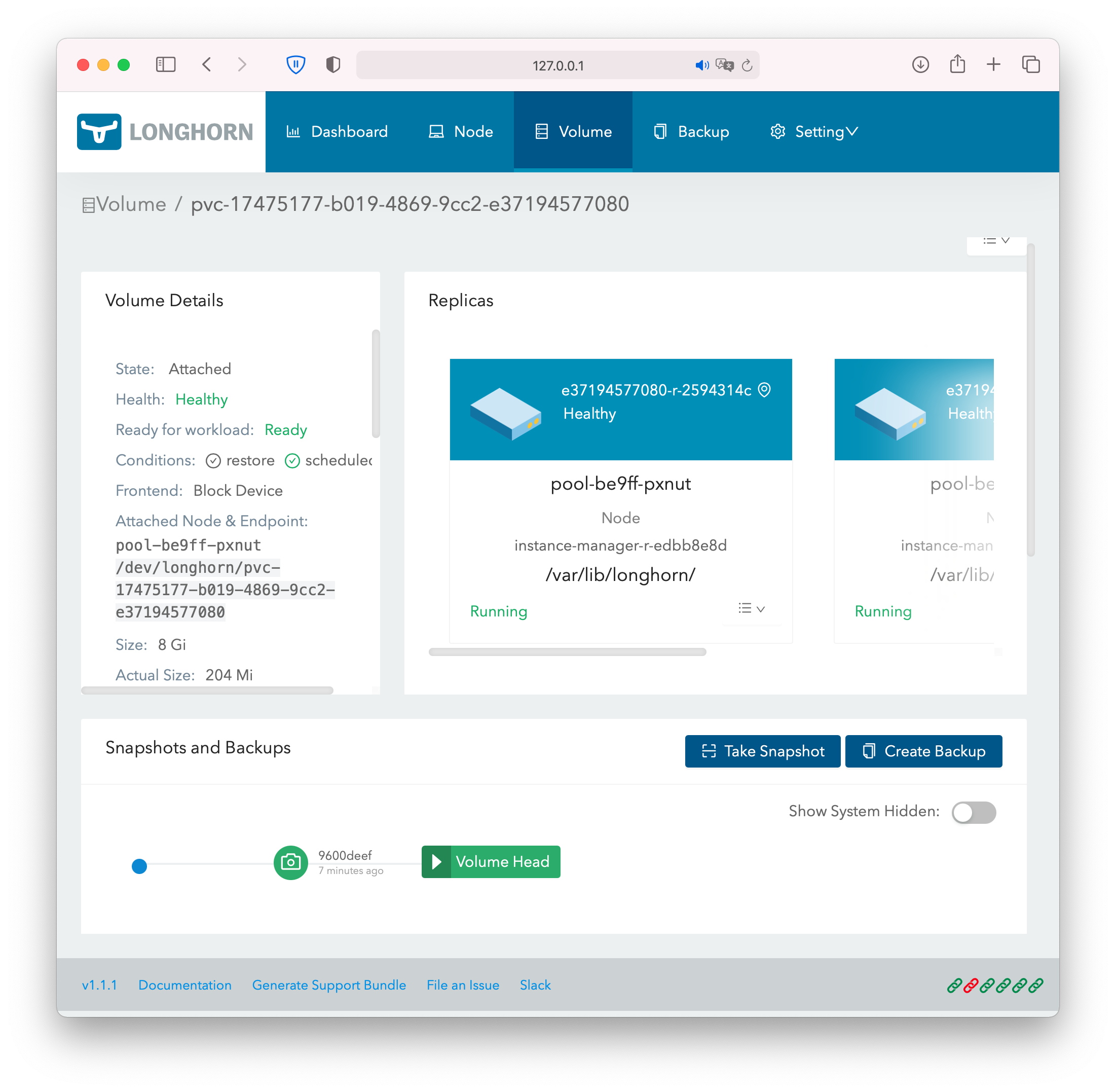

Longhorn is open-source software that you can install inside your SKS cluster. When creating Kubernetes volumes, you can choose Longhorn as the backend (via a StorageClass). Longhorn automatically discovers the disks of all nodes and distributes and replicates your volumes across them. It also supports snapshots, backups to S3-compatible object storage such as Exoscale SOS, and disaster recovery across clusters.

The picture above shows the basic functionality: Longhorn discovers the local storage of all nodes and uses it for Kubernetes volumes. When you create a volume, it is replicated 3 times (default setting) and the replicas are distributed across nodes.

Installation of Longhorn

Installing Longhorn is straightforward and only takes a few minutes. All you need is an SKS cluster and access to it via kubectl.

Get the link to the current Longhorn manifest from the Longhorn docs, then apply it, replacing VERSION with the Longhorn release you want to install:

```shell
kubectl apply -f https://raw.githubusercontent.com/longhorn/longhorn/VERSION/deploy/longhorn.yaml
```

After a few minutes, all Longhorn Pods should be running. You can check this with `kubectl get pods -n longhorn-system`.

If you run into errors, take a look at `kubectl get events -n longhorn-system` and `kubectl logs PODNAME -n longhorn-system`.

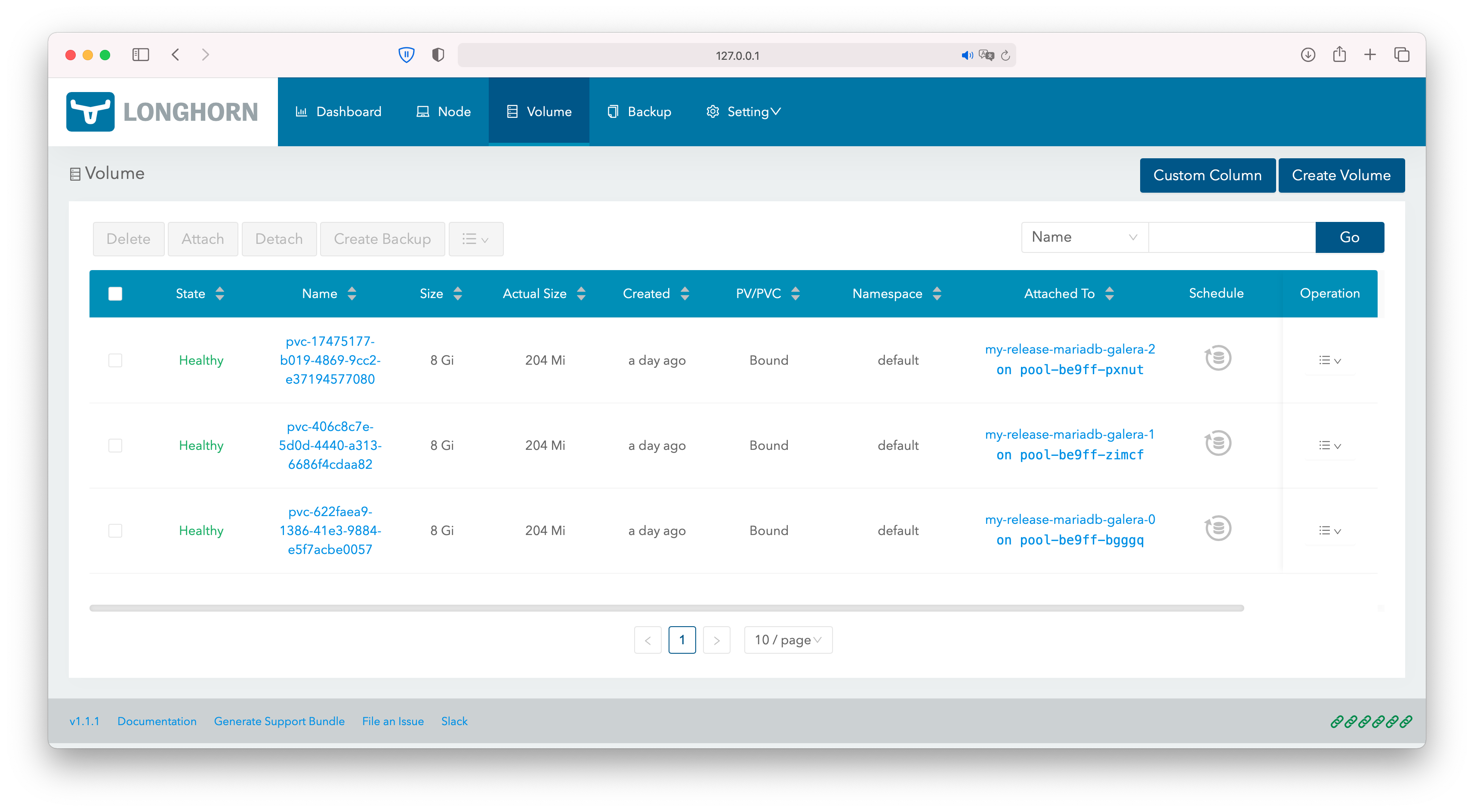

Longhorn comes with a user interface. To access it, you can use port forwarding:

```shell
kubectl port-forward deployment/longhorn-ui 7000:8000 -n longhorn-system
```

Then open the dashboard at http://127.0.0.1:7000.

In the Longhorn UI you can, among other things:

- Create Volumes manually

- Backup/Snapshot Volumes

- Evict nodes

- Configure Longhorn

Creating a Persistent Volume for a Pod

We use a simple example to show how you can use Longhorn.

In theory, you can create a volume manually (in the UI) and then mount it in the manifest of a Pod. In practice, you automate this: a PVC (PersistentVolumeClaim) requests storage, and Longhorn dynamically provisions a matching PV (PersistentVolume), as in the following example:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: longhorn
  resources:
    requests:
      storage: 2Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-test
spec:
  containers:
    - name: container-test
      image: ubuntu
      imagePullPolicy: IfNotPresent
      command:
        - "sleep"
        - "604800"
      volumeMounts:
        - name: volv
          mountPath: /data
  volumes:
    - name: volv
      persistentVolumeClaim:
        claimName: example-pvc
```

The Pod defined below the PVC references the claim by its name (claimName: example-pvc) in its volumes block, and the container mounts that volume at /data.

Even if you delete the Pod or the Pod fails, the volume stays intact. With the default Delete reclaim policy, the volume is only deleted when you explicitly delete the PVC.

For Longhorn volumes, the two relevant PVC access modes are:

- ReadWriteOnce: the volume can be mounted read-write by a single node at a time (in practice, typically by one Pod)

- ReadWriteMany: the volume can be mounted by multiple Pods at the same time, even on different nodes

ReadWriteMany relies on an NFS layer to share the volume across Pods. Because this comes with a performance penalty, database volumes are usually attached using ReadWriteOnce.
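For comparison, a claim for a shared volume differs only in its access mode. This is a minimal sketch: the name shared-pvc is hypothetical, and ReadWriteMany support depends on your Longhorn version and on an NFS client being installed on the nodes, so check the Longhorn docs before relying on it:

```yaml
# Hypothetical example: a ReadWriteMany claim backed by Longhorn
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-pvc            # hypothetical name
spec:
  accessModes:
    - ReadWriteMany           # mountable by several Pods, also on different nodes
  storageClassName: longhorn
  resources:
    requests:
      storage: 2Gi
```

Pods reference such a claim exactly like example-pvc above, via persistentVolumeClaim.claimName.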

The PVC knows to use Longhorn because we specified longhorn as its storageClassName. You can also create a custom StorageClass, in which you can define the number of replicas, the backup schedule, or selectors that restrict volumes to specific nodes.
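A custom StorageClass along these lines could look as follows. This is a sketch under assumptions: the name longhorn-two-replicas and the node tag storage are hypothetical, nodeSelector refers to node tags you assign in the Longhorn UI (not Kubernetes node labels), and reclaimPolicy: Retain is the standard Kubernetes StorageClass field that keeps the volume when its PVC is deleted:

```yaml
# Sketch of a custom Longhorn StorageClass (name and node tag are hypothetical)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: longhorn-two-replicas   # hypothetical name
provisioner: driver.longhorn.io
reclaimPolicy: Retain            # keep the Longhorn volume after its PVC is deleted
parameters:
  numberOfReplicas: "2"          # instead of the default 3
  nodeSelector: "storage"        # only place replicas on nodes tagged "storage" in Longhorn
```

PVCs then select it with storageClassName: longhorn-two-replicas.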

StatefulSets

When scaling a database or a similar application, each replica often needs to be paired with its own volume. Kubernetes offers two concepts for scaling and grouping multiple Pods of the same kind: Deployments and StatefulSets.

A StatefulSet enumerates its replicas (Pods), e.g. my-app-0, my-app-1, my-app-2, and so on. my-app-0 is then always matched with the same volume, e.g. volume-my-app-0, even after it has been rescheduled.

The Pods of a Deployment receive random identifiers, which is why Deployments are not suitable for this use case. Deployments are used, for example, when all of their Pods should share one ReadWriteMany volume.

The following manifest is an example StatefulSet. It creates 3 replicas and therefore 3 individually paired volumes.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: database
spec:
  selector:
    matchLabels:
      app: database
  replicas: 3
  serviceName: deployment-test
  template:
    metadata:
      labels:
        app: database
    spec:
      containers:
        - name: database-container
          image: ubuntu
          imagePullPolicy: IfNotPresent
          command:
            - "sleep"
            - "604800"
          volumeMounts:
            - name: database-volume
              mountPath: /data
  volumeClaimTemplates:
    - metadata:
        name: database-volume
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: longhorn
        resources:
          requests:
            storage: 1Gi
```
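The StatefulSet refers to a governing Service through serviceName: deployment-test, which the manifest itself does not create. Kubernetes expects this to be a headless Service, which gives each Pod a stable DNS name. A minimal sketch, assuming the Pods need no exposed ports (the example containers only sleep):

```yaml
# Headless Service matching serviceName: deployment-test of the StatefulSet
apiVersion: v1
kind: Service
metadata:
  name: deployment-test
spec:
  clusterIP: None      # headless: no virtual IP, one DNS record per Pod instead
  selector:
    app: database      # matches the Pod labels of the StatefulSet
```

With this Service in the same namespace and the default cluster domain, database-0 becomes resolvable as database-0.deployment-test.NAMESPACE.svc.cluster.local.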

We can see the result by showing all Pods and PVCs:

```text
❯ kubectl get pods
NAME         READY   STATUS    RESTARTS   AGE
database-0   1/1     Running   0          5m14s
database-1   1/1     Running   0          4m43s
database-2   1/1     Running   0          4m17s
```

```text
❯ kubectl get pvc
NAME                         STATUS   VOLUME         CAPACITY   ACCESS MODES   STORAGECLASS   AGE
database-volume-database-0   Bound    pvc-5fc92d99   1Gi        RWO            longhorn       5m19s
database-volume-database-1   Bound    pvc-e797976f   1Gi        RWO            longhorn       4m48s
database-volume-database-2   Bound    pvc-7e4328a0   1Gi        RWO            longhorn       4m22s
```

Longhorn and Exoscale SKS in practice: Installing a database cluster

Installing a database is really easy using Helm charts!

First, make sure that Longhorn is the default StorageClass in your cluster. To do so, you can simply patch the longhorn StorageClass (or create a new StorageClass with the respective annotation):

```shell
kubectl patch storageclass longhorn -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```

If another StorageClass is already marked as default, remove the annotation from it so that only one default remains.

To use Helm, install the helm CLI on your local computer (see the Helm documentation: https://helm.sh/docs/).

In this example, we quickly set up a MariaDB Galera cluster using the respective Bitnami Helm chart. Installation takes just two commands:

```shell
helm repo add bitnami https://charts.bitnami.com/bitnami
helm install my-release bitnami/mariadb-galera
```

The first command adds the Bitnami chart repository to your local Helm configuration; the second installs the mariadb-galera chart. You can replace my-release with a release name of your choice.
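Instead of making Longhorn the cluster-wide default, you could also pass the StorageClass and volume size to the chart directly. The sketch below assumes the value names replicaCount, persistence.storageClass, persistence.size and db.name of the Bitnami mariadb-galera chart, and the file name values-galera.yaml is hypothetical; verify the keys against the values.yaml of the chart version you install:

```yaml
# values-galera.yaml (hypothetical file name)
# usage: helm install my-release bitnami/mariadb-galera -f values-galera.yaml
replicaCount: 3
persistence:
  enabled: true
  storageClass: longhorn   # use Longhorn even if it is not the default StorageClass
  size: 8Gi
db:
  name: my_database
```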

```text
❯ kubectl get pods
NAME                          READY   STATUS    RESTARTS   AGE
my-release-mariadb-galera-0   1/1     Running   0          1h
my-release-mariadb-galera-1   1/1     Running   0          1h
my-release-mariadb-galera-2   1/1     Running   0          1h
```

```text
❯ kubectl get pvc
NAME                               STATUS   VOLUME         CAPACITY   ACCESS MODES   STORAGECLASS   AGE
data-my-release-mariadb-galera-0   Bound    pvc-622faea9   8Gi        RWO            longhorn       1h
data-my-release-mariadb-galera-1   Bound    pvc-406c8c7e   8Gi        RWO            longhorn       1h
data-my-release-mariadb-galera-2   Bound    pvc-17475177   8Gi        RWO            longhorn       1h
```

The chart created 3 replicas with one volume each. After the installation, Helm also shows how to open a console in the database:

```shell
kubectl run my-release-mariadb-galera-client --rm --tty -i --restart='Never' --namespace default --image docker.io/bitnami/mariadb-galera:10.5.10-debian-10-r26 --command \
  -- mysql -h my-release-mariadb-galera -P 3306 -uroot -p$(kubectl get secret --namespace default my-release-mariadb-galera -o jsonpath="{.data.mariadb-root-password}" | base64 --decode) my_database
```

```text
MariaDB [my_database]>
```

You can also scale the created StatefulSet up and down. When scaling down, the volumes are not deleted, because the corresponding PVCs still exist.

To connect your applications to the database, use the internal ClusterIP service:

```text
❯ kubectl get svc
NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
my-release-mariadb-galera   ClusterIP   10.100.60.163   <none>        3306/TCP   1h
```
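An application Pod in the same namespace can then reach the database through the Service's DNS name. A minimal sketch: the Pod name, the image my-app:latest and the environment variable names are hypothetical placeholders for your own application, while the Secret name and key are the ones used in the console command above:

```yaml
# Hypothetical application Pod connecting to the Galera cluster
apiVersion: v1
kind: Pod
metadata:
  name: my-app                         # hypothetical
spec:
  containers:
    - name: my-app
      image: my-app:latest             # hypothetical application image
      env:
        - name: DB_HOST                # hypothetical variable names read by the app
          value: my-release-mariadb-galera
        - name: DB_PORT
          value: "3306"
        - name: DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: my-release-mariadb-galera
              key: mariadb-root-password
```

For a real application, a dedicated database user with limited privileges is preferable to the root password used here for brevity.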

And that’s how you can create a whole database cluster inside Kubernetes in less than 15 minutes!

Scheduling backups

Backups are essential, which is why Longhorn can back up volumes to S3-compatible object storage. To connect Longhorn with Exoscale SOS (Simple Object Storage), we have a tutorial available here.

Once this is configured, you can create backups of your volumes. They are stored outside your cluster, in an Exoscale SOS bucket you created, potentially in a different zone. And if you create a new cluster and configure the same backup target, Longhorn automatically discovers all your existing backups.
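For reference, Longhorn reads S3 credentials from a Kubernetes Secret in the longhorn-system namespace. The following sketch assumes the key names AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_ENDPOINTS that Longhorn documents for S3-compatible targets; the Secret name, the zone ch-gva-2 and the credential values are hypothetical placeholders, and the tutorial remains the authoritative guide:

```yaml
# Hypothetical Secret with SOS credentials for the Longhorn backup target
apiVersion: v1
kind: Secret
metadata:
  name: sos-backup-credentials                 # hypothetical name
  namespace: longhorn-system
type: Opaque
stringData:
  AWS_ACCESS_KEY_ID: "REPLACE_ME"              # placeholder: your Exoscale API key
  AWS_SECRET_ACCESS_KEY: "REPLACE_ME"          # placeholder: your Exoscale API secret
  AWS_ENDPOINTS: "https://sos-ch-gva-2.exo.io" # SOS endpoint of the bucket's zone
```

In the Longhorn settings, the backup target would then point at the bucket (for example s3://BUCKET@ch-gva-2/, with BUCKET as a placeholder) and the backup target credential secret at sos-backup-credentials.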

One way to create backups automatically is a custom StorageClass, in which you can also change further options such as the number of replicas:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: my-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: driver.longhorn.io
parameters:
  dataLocality: "disabled"
  numberOfReplicas: "3"
  staleReplicaTimeout: "30"
  fromBackup: ""
  # cron fields: minute hour day-of-month month day-of-week
  # snapshot at minute 0 of every 2nd hour (keep 1), backup at minute 0 of every 12th hour (keep 20)
  recurringJobs: '[
    {
      "name":"snap",
      "task":"snapshot",
      "cron":"0 */2 * * *",
      "retain":1
    },
    {
      "name":"backup",
      "task":"backup",
      "cron":"0 */12 * * *",
      "retain":20
    }
    ]'
```

This sample configuration takes a local snapshot every 2 hours and keeps only the most recent one (retain: 1). Additionally, every 12 hours, volumes using this StorageClass are backed up to the object storage bucket, and the 20 most recent backups are retained. The annotation makes this StorageClass the default one.
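Newer Longhorn releases (to our knowledge from 1.2 on) deprecate the recurringJobs StorageClass parameter in favor of separate RecurringJob resources that volumes join through groups; volumes without an explicit selector should fall into the default group. A sketch of the same schedule under that assumption; the apiVersion depends on your Longhorn version (longhorn.io/v1beta2 in recent releases), so check the docs before applying:

```yaml
# Sketch: the snapshot/backup schedule as RecurringJob resources (newer Longhorn versions)
apiVersion: longhorn.io/v1beta2
kind: RecurringJob
metadata:
  name: snap
  namespace: longhorn-system
spec:
  task: snapshot
  cron: "0 */2 * * *"     # every 2 hours
  retain: 1
  concurrency: 1
  groups:
    - default             # volumes in the "default" group run this job
---
apiVersion: longhorn.io/v1beta2
kind: RecurringJob
metadata:
  name: backup
  namespace: longhorn-system
spec:
  task: backup
  cron: "0 */12 * * *"    # every 12 hours
  retain: 20
  concurrency: 1
  groups:
    - default
```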

Data locality

Longhorn generally performs well. In some cases, however, a Pod may be scheduled on a node that holds no replica of its attached volumes. The Pod then accesses the volume over the network, which adds latency and network traffic.

That’s why you can turn on data locality (ideally for databases): set dataLocality in your StorageClass to best-effort, and Longhorn will try to always keep a replica on the same node as the Pod using the volume.
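A StorageClass with data locality enabled could look like this minimal sketch; the name longhorn-local is hypothetical:

```yaml
# Sketch: Longhorn StorageClass that tries to keep one replica next to the Pod
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: longhorn-local          # hypothetical name
provisioner: driver.longhorn.io
parameters:
  numberOfReplicas: "3"
  dataLocality: "best-effort"   # keep a replica on the node running the Pod, if possible
```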

Updating nodes

To update the nodes of your Kubernetes cluster, you replace them one by one. Longhorn can copy the data to the new nodes.

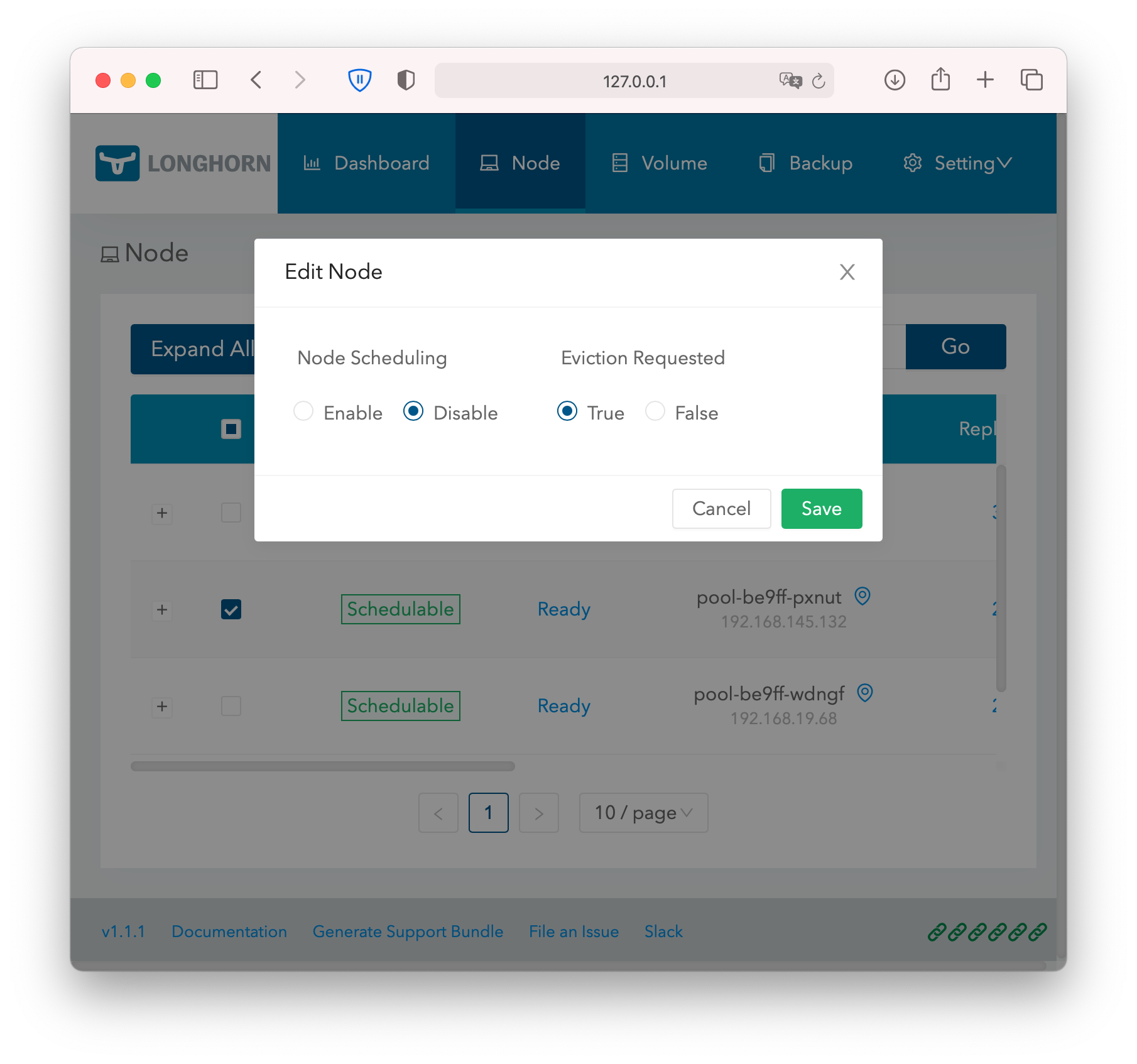

Go to the Longhorn UI and select the node you want to start with. Then click Edit Node, disable scheduling, and set Eviction Requested to true.
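The same two settings are also exposed as fields of Longhorn's Node custom resource, which can be handy for scripting. A sketch, assuming the field names allowScheduling and evictionRequested of the nodes.longhorn.io resource; the file name evict-node.yaml is hypothetical:

```yaml
# evict-node.yaml (hypothetical file name): merge patch for a Longhorn Node resource
spec:
  allowScheduling: false    # same as disabling scheduling in the UI
  evictionRequested: true   # same as setting Eviction Requested to true
```

It could be applied with kubectl -n longhorn-system patch nodes.longhorn.io NODENAME --type merge --patch-file evict-node.yaml.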

The number of replicas on that node will then drop to 0. Afterwards, you can evict the node from the Exoscale SKS node pool, either in the Exoscale web interface or with the Exoscale CLI:

```shell
exo sks nodepool evict CLUSTERNAME CLUSTERNODEPOOL NODENAME
```

The node name is the same as the one shown in Longhorn. Alternatively, in the Exoscale web interface go to SKS -> Your Cluster -> Your Nodepool, click “…” beside the node, and then click Evict.

To replace the node, scale the node pool back up:

```shell
exo sks nodepool scale CLUSTERNAME CLUSTERNODEPOOL SIZE
```

For SIZE, use the number of nodes you had before the eviction. To speed things up, you can also scale up the node pool beforehand; you can then evict multiple nodes at once in Longhorn.

Continue with this procedure until all nodes are replaced.