In an industry where so many people want to change the world, it’s fair to say that low-cost object storage has done just that. Building a business that requires flexible, low-latency storage is now affordable in a way we couldn’t have imagined before.

When building the Exoscale public cloud offering, we knew that a simple object storage service, protected by Swiss privacy laws, would be crucial. After looking at the existing object storage software projects, we decided to build our own solution: Pithos.

Pithos is an open source S3-compatibility layer for Apache Cassandra, the column database. In other words, it allows you to use standard S3 tools to store objects in your own Cassandra cluster. If this is the first time that you’ve looked at object storage software, you may wonder why Pithos is built on top of a NoSQL database, but it’s not all that unusual.

Why Cassandra?

Object storage is, of course, a problem of storing and retrieving data. If you’re doing it right, then it’s a problem of storing and retrieving data at scale: many concurrent connections and open-ended potential for total data size.

These are problems that Cassandra has, by and large, already solved. It scales horizontally, so adding more nodes is a relatively simple solution when the data size increases and when the number of concurrent connections grows.

In a Cassandra cluster, each node is equal: there’s no master. Instead, each node owns part of a hash space, and the location of an individual piece of data is found by hashing its row key. If this is new to you, think of the Cassandra cluster as a street, with each node being a block of apartments. Individual row keys are the apartments within the block. There’s no need to have someone at the end of the street to tell you where to find each apartment.

That means that there’s no single point of failure and it allows Cassandra to make multiple active copies of each thing it stores. This is ideal for maintaining availability of the objects to be stored.
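The lookup described above can be sketched in a few lines of Python. This is a toy model, not Cassandra's actual partitioner: the `HashRing` class, the SHA-256 based `token` function, and the node names are all assumptions made for illustration. It shows how any client can work out which nodes hold a row key by hashing alone, with no central directory.

```python
import bisect
import hashlib


def token(key: str) -> int:
    """Map a string to a position in the hash space (illustrative hash choice)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class HashRing:
    """Toy hash ring: each node owns the range of tokens ending at its own token."""

    def __init__(self, nodes, replicas=3):
        self.replicas = replicas
        # Sort nodes by their token so the ring can be searched with bisect.
        self.ring = sorted((token(name), name) for name in nodes)
        self.tokens = [t for t, _ in self.ring]

    def owners(self, row_key: str):
        """Return the nodes holding copies of row_key, walking clockwise."""
        start = bisect.bisect_left(self.tokens, token(row_key)) % len(self.ring)
        count = min(self.replicas, len(self.ring))
        return [self.ring[(start + i) % len(self.ring)][1] for i in range(count)]


# Hypothetical four-node cluster keeping three copies of each row.
ring = HashRing(["node-a", "node-b", "node-c", "node-d"], replicas=3)
print(ring.owners("my-bucket/photo.jpg"))
```

Because the owners are derived from the key itself, every node computes the same answer independently, which is why no master is needed.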

Dealing with eventual consistency

The flip-side of availability is eventual consistency. Keeping data available for reads and writes, even in the face of some kind of failure, means that the various copies have the potential to get out of sync.

Because Cassandra saves three copies of each object you write to Pithos, there is a chance that those copies could diverge. In practice, the circumstances that lead to a problematic divergence are rare in a healthy cluster. The most likely divergence scenario is this:

- Actor 1 writes a new version of an object to Pithos.

- Pithos saves that to Cassandra node A.

- Actor 2 requests the same object from Pithos, which in turn reads it from Cassandra node B.

- Cassandra replicates the new version from node A to nodes B and C.

In that case, Actor 2 reads the slightly older version of the object before the new version has a chance to replicate from node A to node B.

But that’s okay. Eventual consistency is baked into the S3 way of doing things. Only one operation requires atomicity, changing bucket ownership, and Pithos handles it in a strongly consistent manner. For reads, the S3 protocol allows the possibility of serving an older version of an object but compares checksums to avoid serving stale data.
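To make the stale-read scenario concrete, here is a deliberately simplified simulation. The `Replica` class and the `write`, `read`, and `replicate` helpers are hypothetical and do not reflect how Cassandra actually propagates writes; they only replay the four steps listed above in order.

```python
class Replica:
    """Toy stand-in for one Cassandra node holding a copy of each object."""

    def __init__(self, name):
        self.name = name
        self.data = {}


def write(replica, key, value):
    replica.data[key] = value


def read(replica, key):
    return replica.data.get(key)


def replicate(source, targets, key):
    """Copy the source's current value for key to every target replica."""
    for target in targets:
        target.data[key] = source.data[key]


node_a, node_b, node_c = Replica("A"), Replica("B"), Replica("C")

# Starting point: all three copies agree on version 1.
for node in (node_a, node_b, node_c):
    write(node, "photo.jpg", "v1")

write(node_a, "photo.jpg", "v2")               # Actor 1's write lands on node A
stale = read(node_b, "photo.jpg")              # Actor 2 reads from node B too early
replicate(node_a, [node_b, node_c], "photo.jpg")  # replication catches up
fresh = read(node_b, "photo.jpg")              # a later read sees the new version

print(stale, fresh)
```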

Diving into Pithos

Pithos itself is written in Clojure and so runs alongside Cassandra on the JVM. It’s an Apache 2 licensed project with the code available on GitHub.

It’s a relatively small daemon that lets Cassandra get on with doing most of the work. So, what does Pithos itself do?

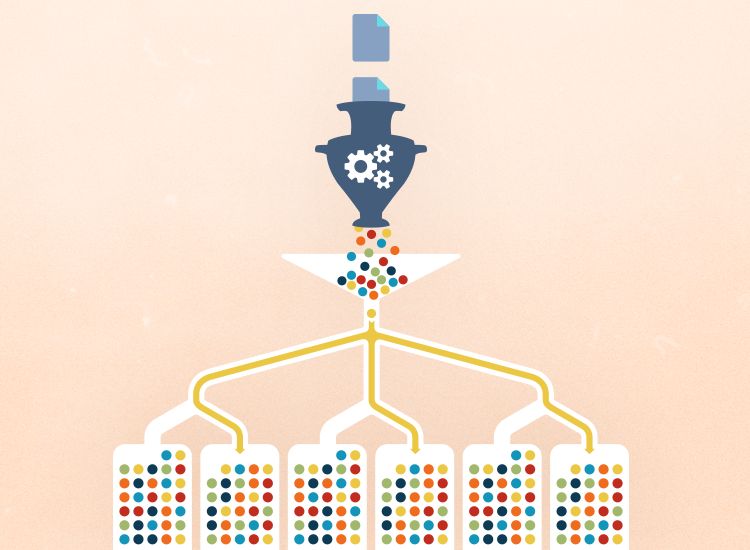

Pithos has three main functions:

- provides an S3 API compatible end-point

- chunks objects into 8 kB pieces then writes them, and accompanying metadata, to Cassandra

- retrieves an object’s chunks from Cassandra and serves them back as the whole object.

So, when you store a file using Pithos, here’s what happens:

- Pithos validates your credentials to access the cluster, destination bucket and object.

- It then chunks your object into those 8 kB pieces.

- It writes the chunks to Cassandra and maintains a map of where they are in the metadata.
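The chunk-and-map idea can be illustrated with a short Python sketch. It is not Pithos's implementation (Pithos is written in Clojure and stores chunks in Cassandra): the function names, the metadata layout, and the reading of "8 kB" as 8 × 1024 bytes are assumptions, and a plain dictionary stands in for the blob storage.

```python
CHUNK_SIZE = 8 * 1024  # assumed interpretation of the 8 kB chunk size


def chunk_object(data: bytes, chunk_size: int = CHUNK_SIZE):
    """Split data into fixed-size chunks keyed by their byte offset.

    Returns (metadata, chunks), where metadata records how to find every chunk.
    """
    chunks = {}
    offsets = []
    for offset in range(0, len(data), chunk_size):
        chunks[offset] = data[offset:offset + chunk_size]
        offsets.append(offset)
    metadata = {"size": len(data), "chunk_size": chunk_size, "offsets": offsets}
    return metadata, chunks


def reassemble(metadata, chunks) -> bytes:
    """Rebuild the original object by reading chunks in offset order."""
    return b"".join(chunks[offset] for offset in metadata["offsets"])


# A 20 KiB payload splits into two full chunks and one partial chunk.
payload = bytes(range(256)) * 80
metadata, chunks = chunk_object(payload)
restored = reassemble(metadata, chunks)
```

Keeping the chunks small and uniform means each one fits comfortably in a single database row, while the metadata is all that is needed to serve the object back whole.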

Let’s look at the architecture of Pithos.

Architecture

There are four main components to Pithos:

- Keystore: authentication information for each tenant.

- Bucketstore: metadata on each bucket.

- Metastore: object metadata.

- Blobstore: a directory of the individual chunks that make up each object.

Although the keystore is usually a simple config file, each of the stores could be a separate Cassandra cluster, meaning that your Pithos cluster can scale massively.

Pithos is also open to swapping out the back-end for any of these stores. For example, if you want the keystore to integrate with a broader corporate authentication service, you can write an integration and then instruct Pithos to use it.
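The general shape of a swappable store can be sketched as follows. This is only an illustration of the pattern in Python, not Pithos's real extension mechanism: the `Keystore` interface, both implementations, the `tenant_for` helper, and the field names are all hypothetical.

```python
from typing import Callable, Optional, Protocol


class Keystore(Protocol):
    """Hypothetical interface: resolve an access key to tenant details."""

    def lookup(self, access_key: str) -> Optional[dict]:
        ...


class ConfigKeystore:
    """Keystore backed by entries loaded from a simple config file."""

    def __init__(self, entries: dict):
        self._entries = entries

    def lookup(self, access_key: str) -> Optional[dict]:
        return self._entries.get(access_key)


class DirectoryKeystore:
    """Keystore that delegates to a corporate directory lookup function."""

    def __init__(self, fetch_user: Callable[[str], Optional[dict]]):
        self._fetch_user = fetch_user

    def lookup(self, access_key: str) -> Optional[dict]:
        user = self._fetch_user(access_key)
        if user is None:
            return None
        # Translate the directory's record into the shape the caller expects.
        return {"tenant": user["department"], "secret": user["api_secret"]}


def tenant_for(keystore: Keystore, access_key: str) -> Optional[str]:
    """Works with any keystore that implements lookup()."""
    entry = keystore.lookup(access_key)
    return entry["tenant"] if entry else None
```

The calling code depends only on `lookup`, so replacing the config-file keystore with the directory-backed one requires no other changes.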

How we use Pithos at Exoscale

At Exoscale, we’ve been running our public object store using Pithos since 2014, storing many terabytes of data, and it has become core to the business of many of our customers.

Pithos is also in use across the world powering private object stores and we’d love to hear from you if you’re also using it. It’s in active development and we absolutely love to receive pull requests!