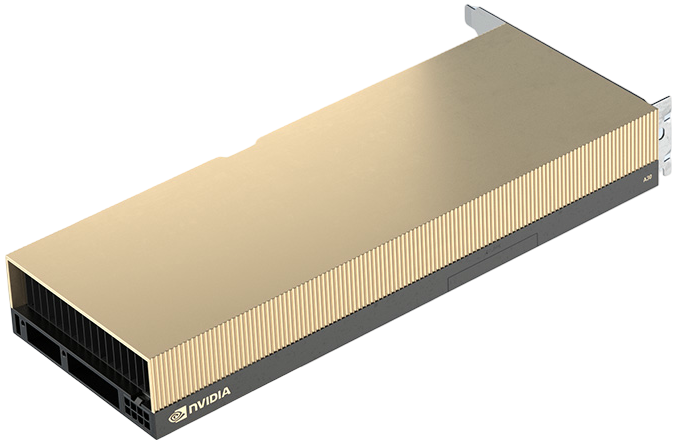

Cost-Effective AI Inference & Training

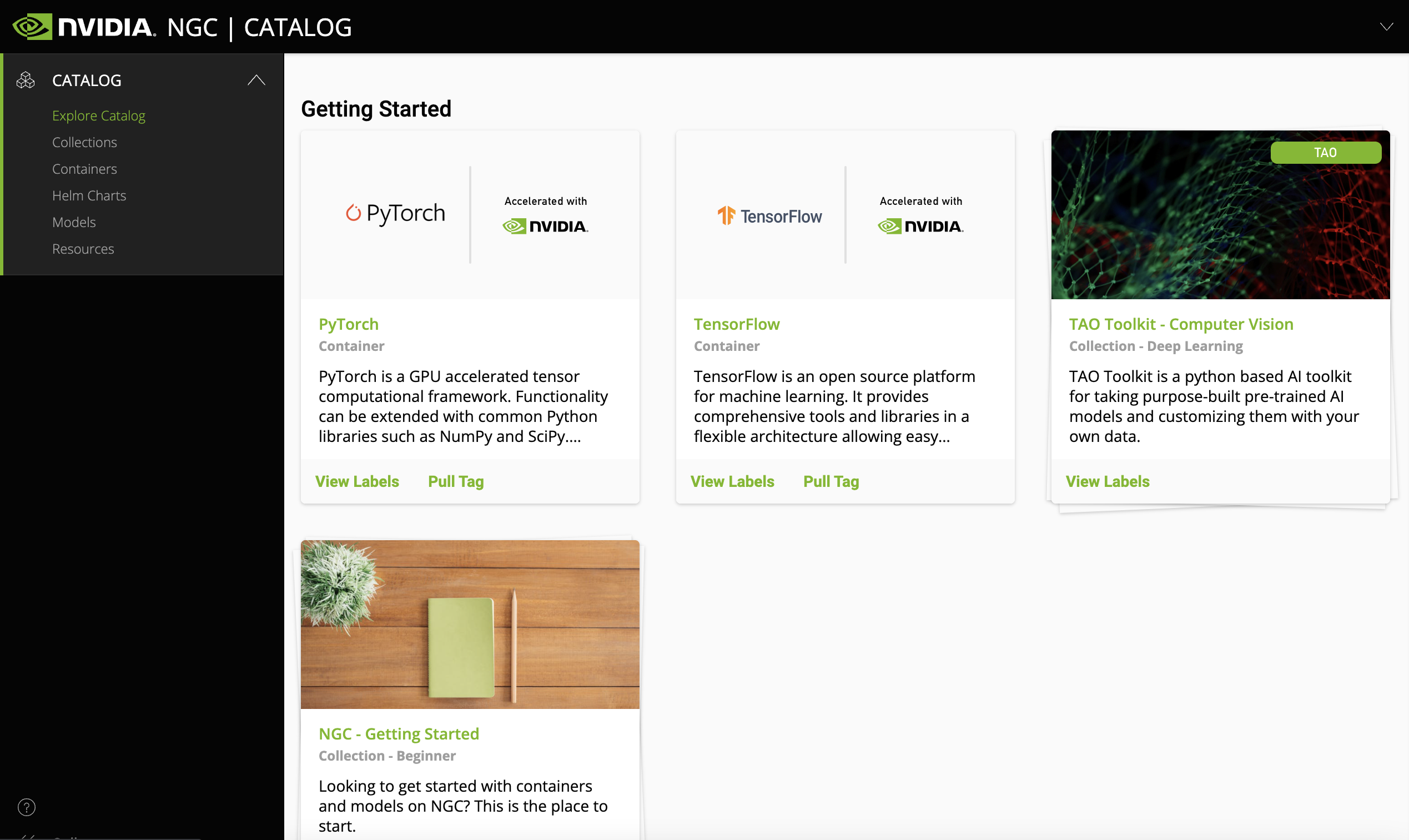

Accelerate AI model inference for applications such as conversational AI and natural language processing (NLP). The A30 is well suited to small- to medium-scale deep learning training, fine-tuning, and transfer learning, making it a practical option for budget-conscious projects.