One of the biggest challenges with Kubernetes is bringing up a cluster for the first time. Several projects attempt to address this gap, including kmachine and minikube. However, both assume you are starting from a blank slate. What if your instances are already provisioned, or you have another means of provisioning hosts, such as configuration management?

This is the problem the people at Rancher are solving with the Rancher Kubernetes Engine (RKE). At its core is a YAML-based configuration file that defines the hosts and makeup of your Kubernetes cluster. RKE does the heavy lifting of installing the required Kubernetes components and configuring them for your cluster.

This tutorial shows you how to quickly set up a few virtual machines to host our Kubernetes cluster, bring up Kubernetes using RKE, and finally run a sample application inside Kubernetes.

Accompanying configuration files can be found on GitHub.

Note

This example brings up a bare-minimum cluster with a sample application for experimentation, and should in no way be considered a production-ready deployment.

Prerequisites and Setup

For our virtual cluster, we will be using Exoscale, as it allows us to get up and running with minimal effort. You will need to install three binaries to follow this guide. While the guide assumes Linux, the binaries are also available for Windows and macOS.

- Exoscale CLI - Required to set up the environment and manage our systems

- RKE CLI - Required to provision Kubernetes

- kubectl - Required to manage our new Kubernetes cluster

In addition, you will need an Exoscale account, since it is used to set up the Exoscale CLI.
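Before going further, it can help to confirm that the three tools are actually in place. The function below is a minimal sketch (check_tools is a hypothetical name); it assumes each binary is either in the current directory, as in the ./exo, ./rke, and ./kubectl commands used throughout this guide, or somewhere on your PATH.

```shell
# Minimal sketch: report where each required CLI lives, or that it is missing.
# Usage: check_tools exo rke kubectl
check_tools() (
  missing=0
  for bin in "$@"; do
    if [ -f "./$bin" ] && [ -x "./$bin" ]; then
      # Found in the current directory, matching the ./exo style used in this guide.
      echo "found ./$bin"
    elif command -v "$bin" >/dev/null 2>&1; then
      # Found somewhere on PATH.
      echo "found $(command -v "$bin")"
    else
      echo "missing: $bin" >&2
      missing=1
    fi
  done
  # Non-zero exit status if any tool was not found.
  exit "$missing"
)
```

Running check_tools exo rke kubectl prints where each tool was found and returns a non-zero status if any of them is missing.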

Configure Exoscale CLI

Once your Exoscale account is set up, you need to configure the Exoscale client. Assuming you are in the directory containing the exo binary, run:

$ ./exo config

Hi happy Exoscalian, some configuration is required to use exo.

We now need some very important information, find them there.

<https://portal.exoscale.com/account/profile/api>

[+] API Key [none]: EXO························

[+] Secret Key [none]: ······································

[...]

Note

When you go to the API profile page you will see the API Key and Secret. Be sure to copy and paste both at the prompts.

Provisioning the Kubernetes Environment with the Exoscale CLI

Now that we have configured the Exoscale CLI, we need to prepare the Exoscale cloud environment. This requires setting up a firewall rule that will be inherited by the instances that will become the Kubernetes cluster, plus an optional step of creating and adding your SSH public key.

Defining the firewall rules

The firewall (security group) we create must expose at least three ports: 22 for SSH access, plus 6443 and 10240 for kubectl and RKE to bring up and manage the cluster. We also need a rule that lets the instances in the security group communicate with each other.

The first step to this is to create the firewall or security group:

$ ./exo firewall create rke-k8s -d "RKE k8s SG"

┼──────────┼─────────────────┼──────────────────────────────────────┼

│ NAME │ DESCRIPTION │ ID │

┼──────────┼─────────────────┼──────────────────────────────────────┼

│ rke-k8s │ RKE K8S SG │ 01a3b13f-a312-449c-a4ce-4c0c68bda457 │

┼──────────┼─────────────────┼──────────────────────────────────────┼

The next step is to add the rules (command output omitted):

$ ./exo firewall add rke-k8s -p ALL -s rke-k8s

$ ./exo firewall add rke-k8s -p tcp -P 6443 -c 0.0.0.0/0

$ ./exo firewall add rke-k8s -p tcp -P 10240 -c 0.0.0.0/0

$ ./exo firewall add rke-k8s ssh
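If you need to recreate the security group later (for example, after tearing the environment down), the four rule commands above can be wrapped in a small shell function. This is a minimal sketch: add_rke_firewall_rules is a hypothetical name, it reuses exactly the flags shown above, and running it with EXO=echo prints the commands instead of calling the Exoscale API.

```shell
# Minimal sketch: add the four rke-k8s firewall rules used in this section.
# EXO defaults to ./exo; run with EXO=echo to preview the commands instead.
# Usage: add_rke_firewall_rules rke-k8s
add_rke_firewall_rules() (
  exo=${EXO:-./exo}
  sg=${1:-rke-k8s}
  # Allow all traffic between members of the security group.
  "$exo" firewall add "$sg" -p ALL -s "$sg" || exit 1
  # Ports 6443 and 10240, open to any address as in the commands above.
  "$exo" firewall add "$sg" -p tcp -P 6443 -c 0.0.0.0/0 || exit 1
  "$exo" firewall add "$sg" -p tcp -P 10240 -c 0.0.0.0/0 || exit 1
  # SSH (port 22) using the CLI's ssh shorthand.
  "$exo" firewall add "$sg" ssh
)
```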

You can confirm the results by invoking exo firewall show:

$ ./exo firewall show rke-k8s

┼─────────┼────────────────┼──────────┼──────────┼─────────────┼──────────────────────────────────────┼

│ TYPE │ SOURCE │ PROTOCOL │ PORT │ DESCRIPTION │ ID │

┼─────────┼────────────────┼──────────┼──────────┼─────────────┼──────────────────────────────────────┼

│ INGRESS │ CIDR 0.0.0.0/0 │ tcp │ 22 (ssh) │ │ 40d82512-2196-4d94-bc3e-69b259438c57 │

│ │ CIDR 0.0.0.0/0 │ tcp │ 10240 │ │ 12ceea53-3a0f-44af-8d28-3672307029a5 │

│ │ CIDR 0.0.0.0/0 │ tcp │ 6443 │ │ 18aa83f3-f996-4032-87ef-6a06220ce850 │

│ │ SG │ all │ 0 │ │ 7de233ad-e900-42fb-8d93-05631bcf2a70 │

┼─────────┼────────────────┼──────────┼──────────┼─────────────┼──────────────────────────────────────┼

Optional: Creating and adding an ssh key

One of the nice things about the Exoscale CLI is that it can create an SSH key for each instance you bring up. However, there are times when you will want a single administrative SSH key for the cluster. You can have the Exoscale CLI create that key or use it to import your own, using Exoscale's sshkey subcommand.

If you have a key you want to use:

$ ./exo sshkey upload [keyname] [ssh-public-key-path]

Or, if you'd like to create a unique key for this cluster:

$ ./exo sshkey create rke-k8s-key

┼─────────────┼─────────────────────────────────────────────────┼

│ NAME │ FINGERPRINT │

┼─────────────┼─────────────────────────────────────────────────┼

│ rke-k8s-key │ 0d:03:46:c6:b2:72:43:dd:dd:04:bc:8c:df:84:f4:d1 │

┼─────────────┼─────────────────────────────────────────────────┼

-----BEGIN RSA PRIVATE KEY-----

MIIC...

-----END RSA PRIVATE KEY-----

$

Save the RSA PRIVATE KEY block into a file, as it will be your only means of accessing the cluster with that key name, and restrict the file's permissions (for example, chmod 600), since ssh-add refuses private key files that other users can read. In both cases, make sure the ssh-agent daemon is running and your key has been added to it. If you haven't done so already, run:

$ ssh-add [path-to-private-ssh-key]
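If you let exo create the key, the save step described above can be scripted so that only the PEM block ends up in the key file, with permissions ssh-add will accept. This is a minimal sketch: save_private_key is a hypothetical helper, and it assumes you first redirected the output of ./exo sshkey create to a file (the file names below are illustrative) and that the key is printed in the RSA PRIVATE KEY format shown above.

```shell
# Minimal sketch: extract the private key block from a saved `exo sshkey create` transcript.
# Usage: save_private_key rke-k8s-key.out ~/.ssh/rke-k8s-key
save_private_key() (
  src=$1
  dest=$2
  umask 077   # create the key file readable by its owner only
  # Keep only the lines from BEGIN to END, dropping the fingerprint table.
  sed -n '/-----BEGIN RSA PRIVATE KEY-----/,/-----END RSA PRIVATE KEY-----/p' "$src" > "$dest"
  if ! grep -q -e '-----END RSA PRIVATE KEY-----' "$dest"; then
    rm -f "$dest"
    echo "no RSA private key found in $src" >&2
    exit 1
  fi
  # Also tighten permissions if the destination file already existed.
  chmod 600 "$dest"
)
```

For example, save_private_key rke-k8s-key.out ~/.ssh/rke-k8s-key followed by ssh-add ~/.ssh/rke-k8s-key.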

Creating your Exoscale instances

At this point we are ready to create the instances. We will use the Medium service offering, as it provides enough RAM for both Kubernetes and our sample application. We will use the Ubuntu 16.04 image because of the Docker version RKE requires to bring up our cluster. Finally, 10 GB of disk space will be enough to experiment with.

Note

If you go with a smaller instance size than medium, you will not have enough RAM to bootstrap the Kubernetes cluster.

Step 1: Create instance configuration script

To automate the instance configuration, we will use cloud-init. This is as easy as creating a YAML file that describes the actions to take and passing that file on the Exoscale command line:

```yaml
#cloud-config
manage_etc_hosts: true
package_update: true
package_upgrade: true
packages:
  - curl
runcmd:
  - "curl https://releases.rancher.com/install-docker/17.03.sh | bash"
  - "usermod -aG docker ubuntu"
  - "mkdir /data"
power_state:
  mode: reboot
```

Copy and paste the block of text above into a new file called cloud-init.yml.
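Because YAML is sensitive to indentation, copy-and-paste mistakes in cloud-init.yml are easy to make and only show up once an instance boots. The quick local check sketched below (check_cloud_config is a hypothetical helper) catches two common problems: cloud-init only treats user data as cloud-config when its first line is exactly #cloud-config, and YAML does not allow tabs for indentation. For simplicity, this check rejects a tab anywhere in the file.

```shell
# Minimal sketch: catch common copy/paste mistakes in cloud-init.yml before booting instances.
# Usage: check_cloud_config cloud-init.yml
check_cloud_config() (
  file=${1:-cloud-init.yml}
  # cloud-init only treats user data as cloud-config if the first line is exactly this.
  if [ "$(head -n 1 "$file")" != "#cloud-config" ]; then
    echo "$file: first line must be #cloud-config" >&2
    exit 1
  fi
  # YAML forbids tabs for indentation; this simple check rejects any tab in the file.
  tab=$(printf '\t')
  if grep -q "$tab" "$file"; then
    echo "$file: contains tab characters" >&2
    exit 1
  fi
)
```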

Step 2: Create the instances

Next, we are going to create 4 instances:

```shell
$ for i in 1 2 3 4; do
    ./exo vm create rancher-$i \
      --cloud-init-file cloud-init.yml \
      --service-offering medium \
      --template "Ubuntu 16.04 LTS" \
      --security-group rke-k8s \
      --disk 10
  done
```

Creating private SSH key

Deploying "rancher-1" ............. success!

What to do now?

1. Connect to the machine

> exo ssh rancher-1

ssh -i "/home/cab/.exoscale/instances/85fc654f-5761-4a02-b501-664ae53c671d/id_rsa" ubuntu@185.19.29.207

2. Put the SSH configuration into ".ssh/config"

> exo ssh rancher-1 --info

Host rancher-1

HostName 185.19.29.207

User ubuntu

IdentityFile /home/cab/.exoscale/instances/85fc654f-5761-4a02-b501-664ae53c671d/id_rsa

Tip of the day:

You're the sole owner of the private key.

Be cautious with it.

Note

If you created or uploaded an SSH key pair, you can add the --keypair <common key> argument, where <common key> is the name you gave that key.

Also, save the hostname and IP address of each instance. You will need these for the RKE setup.

After waiting several minutes (about 5 to be safe), you will have four brand-new instances configured with Docker and ready to go. Running ./exo vm list should show something like the following:

┼───────────┼────────────────┼─────────────────┼─────────┼──────────┼──────────────────────────────────────┼

│ NAME │ SECURITY GROUP │ IP ADDRESS │ STATUS │ ZONE │ ID │

┼───────────┼────────────────┼─────────────────┼─────────┼──────────┼──────────────────────────────────────┼

│ rancher-4 │ rke-k8s │ 159.100.240.102 │ Running │ ch-gva-2 │ acb53efb-95d1-48e7-ac26-aaa9b35c305f │

│ rancher-3 │ rke-k8s │ 159.100.240.9 │ Running │ ch-gva-2 │ 6b7707bd-9905-4547-a7d4-3fd3fdd83ac0 │

│ rancher-2 │ rke-k8s │ 185.19.30.203 │ Running │ ch-gva-2 │ c99168a0-46db-4f75-bd0b-68704d1c7f79 │

│ rancher-1 │ rke-k8s │ 185.19.30.83 │ Running │ ch-gva-2 │ 50605a5d-b5b6-481c-bb34-1f7ee9e1bde8 │

┼───────────┼────────────────┼─────────────────┼─────────┼──────────┼──────────────────────────────────────┼
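Rather than waiting a fixed five minutes, you can poll each instance until its setup has finished. Since cloud-init installs Docker, adds the ubuntu user to the docker group, and then reboots, a successful docker info run over SSH as ubuntu is a reasonable sign that an instance is ready. The retry helper below is a hypothetical sketch; the example in its comments uses the sample IP addresses above, assumes your key is loaded into ssh-agent (with per-instance keys you would add -i and the path exo printed), and the accept-new option requires OpenSSH 7.6 or later.

```shell
# Minimal sketch: run a command until it succeeds, up to <attempts> times,
# sleeping <delay> seconds between tries.
# Usage: retry <attempts> <delay> <command> [args...]
# Example:
#   for ip in 185.19.30.83 185.19.30.203 159.100.240.9 159.100.240.102; do
#     retry 60 10 ssh -o BatchMode=yes -o StrictHostKeyChecking=accept-new \
#       ubuntu@"$ip" docker info >/dev/null
#   done
retry() (
  attempts=$1
  delay=$2
  shift 2
  i=1
  while :; do
    if "$@"; then
      exit 0
    fi
    if [ "$i" -ge "$attempts" ]; then
      echo "giving up after $attempts attempts: $*" >&2
      exit 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
)
```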

RKE and Kubernetes

The Rancher Kubernetes Engine command is used to bring up, tear down, and back up the configuration of a Kubernetes cluster. At its core is a configuration file named cluster.yml. While RKE can create this file interactively with the rke config command, going through the prompts can be tedious. Instead, we will create the config file ourselves.

The file below is a sample file that can be saved and modified as cluster.yml:

```yaml
---
ssh_key_path: [path to ssh private key]
ssh_agent_auth: true
cluster_name: rke-k8s
nodes:
  - address: [ip address of rancher-1]
    name: rancher-1
    user: ubuntu
    role:
      - controlplane
      - etcd
      - worker
  - address: [ip address of rancher-2]
    name: rancher-2
    user: ubuntu
    role:
      - worker
  - address: [ip address of rancher-3]
    name: rancher-3
    user: ubuntu
    role:
      - worker
  - address: [ip address of rancher-4]
    name: rancher-4
    user: ubuntu
    role:
      - worker
```

Things you will need to modify:

- ssh_key_path: If you uploaded or created a single SSH key with exo sshkey, change this path to the location of the matching private key. Otherwise, move the ssh_key_path line inside each node entry and change the path to match the key generated for that instance.

- address: Change these to the IP addresses you saved in the previous step.

- cluster_name: This should match your firewall/security group name.
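If you would rather not edit the placeholders by hand, a small shell function can print an equivalent cluster.yml from your key path and the saved IP addresses. This is a minimal sketch (gen_cluster_yml is a hypothetical name) that assumes a single shared SSH key, so ssh_key_path stays at the top level. It mirrors the fields of the sample file above, giving the first address the controlplane, etcd, and worker roles and every other address the worker role.

```shell
# Minimal sketch: print a cluster.yml like the sample above.
# Usage: gen_cluster_yml ~/.ssh/rke-k8s-key 185.19.30.83 185.19.30.203 \
#          159.100.240.9 159.100.240.102 > cluster.yml
gen_cluster_yml() (
  if [ "$#" -lt 2 ]; then
    echo "usage: gen_cluster_yml <ssh-key-path> <ip> [ip...]" >&2
    exit 2
  fi
  key_path=$1
  shift
  printf '%s\n' '---'
  printf 'ssh_key_path: %s\n' "$key_path"
  printf 'ssh_agent_auth: true\n'
  printf 'cluster_name: rke-k8s\n'
  printf 'nodes:\n'
  n=1
  for ip in "$@"; do
    printf '  - address: %s\n' "$ip"
    printf '    name: rancher-%s\n' "$n"
    printf '    user: ubuntu\n'
    printf '    role:\n'
    if [ "$n" -eq 1 ]; then
      # The first node also runs the control plane and etcd, as in the sample file.
      printf '      - controlplane\n      - etcd\n'
    fi
    printf '      - worker\n'
    n=$((n + 1))
  done
)
```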

Once you have saved your updated cluster.yml, running ./rke up is all you need to bring up the Kubernetes cluster. There will be a flurry of status updates as the Docker images for the various Kubernetes components are downloaded onto each node, installed, and configured.

If everything goes well, then you will see the following when RKE finishes:

$ ./rke up

...

INFO[0099] Finished building Kubernetes cluster successfully

Congratulations, you have just brought up a Kubernetes cluster!

Configuring and using kubectl

One thing you will notice is that RKE created the Kubernetes configuration file kube_config_cluster.yml, which kubectl uses to communicate with the cluster. To make running kubectl easier going forward, set the KUBECONFIG environment variable so you don't need to pass the --kubeconfig flag each time:

export KUBECONFIG=/path/to/kube_config_cluster.yml

Here are a few sample status commands. The first lists all registered nodes, their roles as defined in the cluster.yml file above, and the Kubernetes version each node is running.

$ ./kubectl get nodes

NAME STATUS ROLES AGE VERSION

159.100.240.102 Ready worker 3m v1.11.1

159.100.240.9 Ready worker 3m v1.11.1

185.19.30.203 Ready worker 3m v1.11.1

185.19.30.83 Ready controlplane,etcd,worker 3m v1.11.1

The second command shows the health of the cluster's core components (scheduler, controller manager, and etcd).

$ ./kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}
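When scripting the bring-up, you may want to turn the visual check above into a pass/fail test. The helper below is a minimal sketch (expect_status is a hypothetical name) that assumes the default table output shown here, with the status in the second column. Note that a node status such as Ready,SchedulingDisabled would be reported as a failure.

```shell
# Minimal sketch: succeed only if every data row has the expected STATUS (2nd column).
# Usage: ./kubectl get nodes | expect_status Ready
#        ./kubectl get cs | expect_status Healthy
expect_status() {
  awk -v want="$1" '
    NR == 1 { next }                  # skip the header row
    { rows++ }
    $2 != want { print "not " want ": " $1; bad = 1 }
    END {
      if (rows == 0) { print "no rows to check"; bad = 1 }
      exit bad
    }'
}
```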

Note

For more information about RKE and the cluster configuration file, you can visit Rancher’s documentation page.

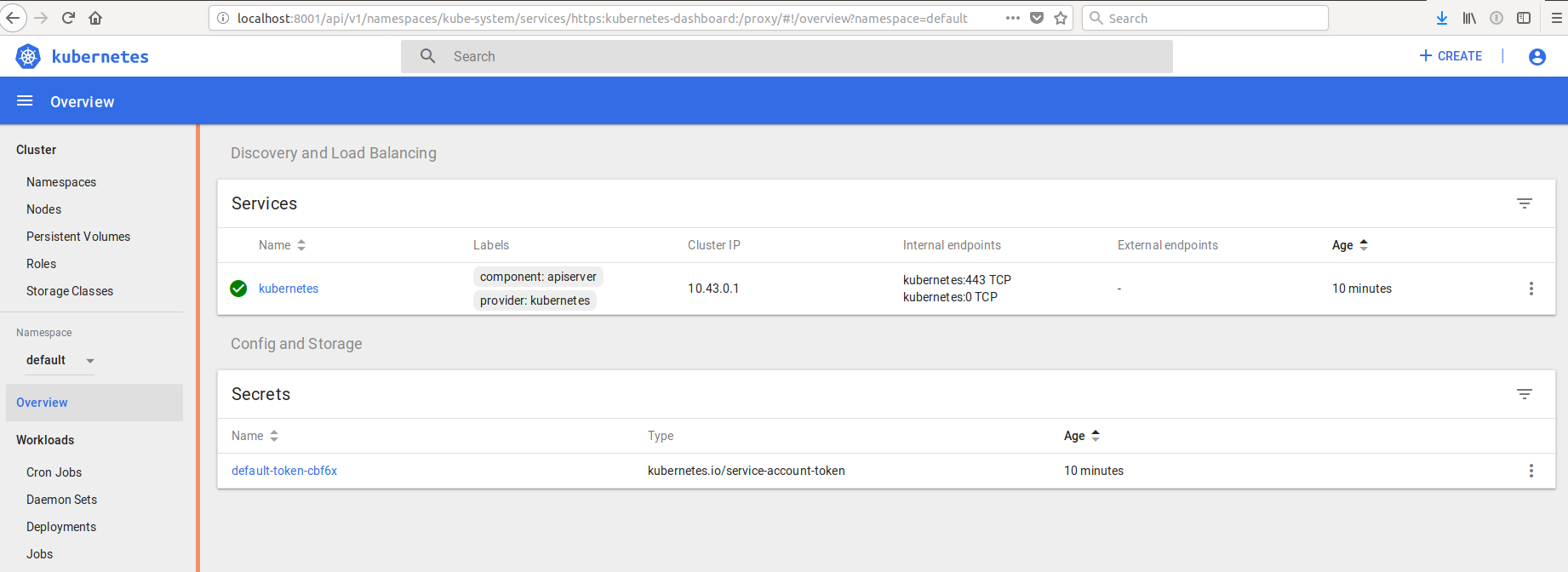

Optional: Installing the Kubernetes Dashboard

To make it easier to collect status information on the Kubernetes cluster, we will install the Kubernetes dashboard. To install the dashboard, you will run:

$ ./kubectl create -f https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

At this point, the dashboard is installed and running, but the only way to access it is from inside the Kubernetes cluster. To expose the dashboard port onto your workstation so that you can interact with it, you will need to proxy the port by running:

$ ./kubectl proxy

You can now reach the dashboard through the proxy at: http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

However, to make full use of the dashboard, you will need to authenticate your session. This requires a service account token stored in one of the secrets that were generated when we ran rke up. The following command extracts a suitable token (from the clusterrole-aggregation-controller service account) that you can use to authenticate against the dashboard:

```shell
$ ./kubectl -n kube-system describe secrets \
    $(./kubectl -n kube-system get secrets | awk '/clusterrole-aggregation-controller/ {print $1}') \
    | awk '/token:/ {print $2}'
```

Copy and paste the long string that is returned into the authentication prompt on the dashboard webpage, and explore the details of your cluster.
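As an alternative to parsing the output of kubectl describe, the same token can be read straight from the secret's data.token field with kubectl's jsonpath output and then base64-decoded. The function below is a minimal sketch: dashboard_token is a hypothetical name, KUBECTL defaults to ./kubectl, and it assumes, like the command above, that a secret whose name contains clusterrole-aggregation-controller exists in the kube-system namespace.

```shell
# Minimal sketch: print the clusterrole-aggregation-controller service account token.
# Usage: dashboard_token
dashboard_token() (
  kubectl=${KUBECTL:-./kubectl}
  # `-o name` prints entries such as secret/<name>, which `get` accepts directly.
  secret=$("$kubectl" -n kube-system get secrets -o name |
    grep 'clusterrole-aggregation-controller' | head -n 1)
  if [ -z "$secret" ]; then
    echo "no clusterrole-aggregation-controller secret found" >&2
    exit 1
  fi
  # Secret data is stored base64-encoded, so decode the token field.
  "$kubectl" -n kube-system get "$secret" -o jsonpath='{.data.token}' | base64 --decode
)
```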

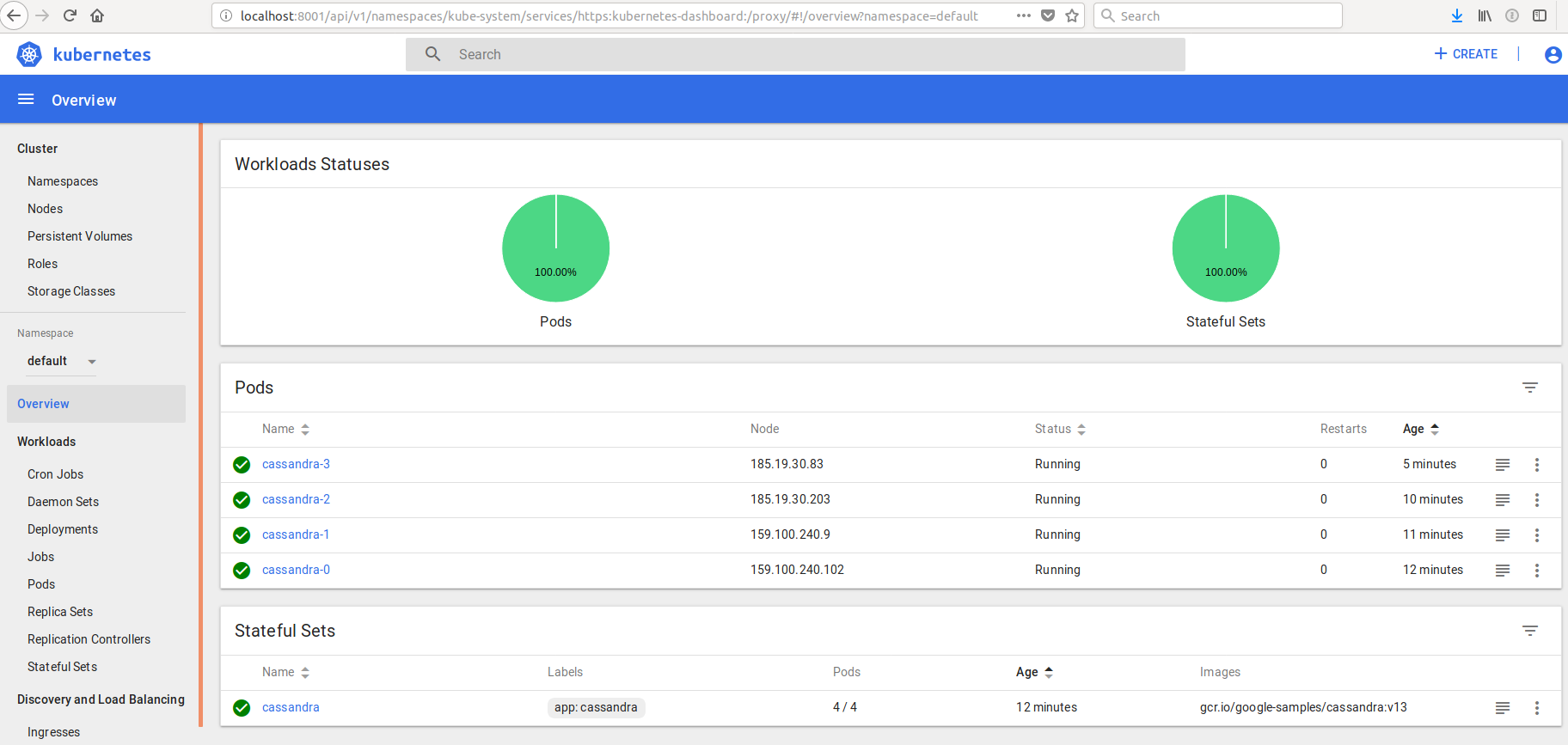

Adding Cassandra to Kubernetes

Now for the fun part. We are going to bring up Cassandra. This will be a simple cluster that will use the local disks for storage. This will give us something to play with when it comes to installing a service, and seeing what happens inside Kubernetes.

To install Cassandra, we need to specify a service configuration that will be exposed by Kubernetes, and an application definition file that specifies things like networking, storage configuration, number of replicas, etc.

Step 1: Cassandra Service File

First, we will start with the service file:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: cassandra
  name: cassandra
spec:
  clusterIP: None
  ports:
    - port: 9042
  selector:
    app: cassandra
```

Save the service file as cassandra-service.yaml, and load it:

$ ./kubectl create -f ./cassandra-service.yaml

You should see it load successfully, and you can verify using kubectl:

$ ./kubectl get svc cassandra

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cassandra ClusterIP None <none> 9042/TCP 46s

Note

For more details on Service configurations, you can read more about it in the Kubernetes Service Networking Guide.
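Setting clusterIP: None makes this a headless Service: instead of a single virtual IP, Kubernetes publishes DNS records for the individual pods behind it. For StatefulSet pods whose serviceName points at this Service (presumably the case for the StatefulSet in the next step, which is not reproduced here), each pod gets a stable name of the form <pod>.<service>.<namespace>.svc.<cluster-domain>. The function below is a hypothetical sketch that prints those names, assuming the default namespace and the cluster.local domain unless told otherwise.

```shell
# Minimal sketch: list the stable DNS names of StatefulSet pods behind a headless Service.
# Usage: statefulset_dns_names <statefulset> <service> <replicas> [namespace] [domain]
statefulset_dns_names() (
  name=$1
  service=$2
  replicas=$3
  namespace=${4:-default}
  domain=${5:-cluster.local}
  i=0
  # StatefulSet pods are numbered 0 .. replicas-1.
  while [ "$i" -lt "$replicas" ]; do
    printf '%s-%s.%s.%s.svc.%s\n' "$name" "$i" "$service" "$namespace" "$domain"
    i=$((i + 1))
  done
)
```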

Step 2: Cassandra StatefulSet

A StatefulSet is a type of Kubernetes workload for applications that are expected to persist some kind of state, such as our Cassandra example.

We will download the configuration from the article's GitHub repo and apply it:

$ ./kubectl create -f https://raw.githubusercontent.com/exoscale-labs/Using-RKE-to-Deploy-a-Kubernetes-Cluster-on-Exoscale/master/cassandra-statefulset.yaml

statefulset.apps/cassandra created

storageclass.storage.k8s.io/fast created

You can check the state of the StatefulSet we are loading:

$ ./kubectl get statefulset

NAME DESIRED CURRENT AGE

cassandra 3 3 4m

You can even interact with Cassandra inside its pod, for example to verify that Cassandra is up:

$ ./kubectl exec -it cassandra-0 -- nodetool status

Datacenter: DC1-K8Demo

======================

Status=Up/Down

|/ State=Normal/Leaving/Joining/Moving

-- Address Load Tokens Owns (effective) Host ID Rack

UN 10.42.2.2 104.55 KiB 32 59.1% ac30ba66-bd59-4c8d-ab7b-525daeb85904 Rack1-K8Demo

UN 10.42.1.3 84.81 KiB 32 75.0% 92469b85-eeae-434f-a27d-aa003531cff7 Rack1-K8Demo

UN 10.42.3.3 70.88 KiB 32 65.9% 218a69d8-52f2-4086-892d-f2c3c56b05ae Rack1-K8Demo
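To check this without reading the table, you can count the rows that nodetool marks UN (Up/Normal) and compare the count with the number of replicas you expect. The helper below is a minimal sketch (cassandra_ring_ready is a hypothetical name) that assumes the nodetool status layout shown above, where the state code is the first column.

```shell
# Minimal sketch: succeed when exactly <want> nodes are Up/Normal ("UN").
# Usage: ./kubectl exec cassandra-0 -- nodetool status | cassandra_ring_ready 3
cassandra_ring_ready() {
  awk -v want="$1" '
    $1 == "UN" { up++ }
    END {
      printf "%d of %d nodes Up/Normal\n", up, want
      exit (up == want) ? 0 : 1
    }'
}
```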

Now suppose we want to scale up the number of replicas from 3 to 4. To do that, run:

$ ./kubectl edit statefulset cassandra

This opens the StatefulSet in your default text editor. Scroll down to the replicas line, change the value from 3 to 4, then save and exit. The next time you run kubectl, you should see the following:

$ ./kubectl get statefulset

NAME DESIRED CURRENT AGE

cassandra 4 4 10m

$ ./kubectl exec -it cassandra-0 -- nodetool status

Datacenter: DC1-K8Demo

======================

Status=Up/Down

|/ State=Normal/Leaving/Joining/Moving

-- Address Load Tokens Owns (effective) Host ID Rack

UN 10.42.2.2 104.55 KiB 32 51.0% ac30ba66-bd59-4c8d-ab7b-525daeb85904 Rack1-K8Demo

UN 10.42.1.3 84.81 KiB 32 56.7% 92469b85-eeae-434f-a27d-aa003531cff7 Rack1-K8Demo

UN 10.42.3.3 70.88 KiB 32 47.2% 218a69d8-52f2-4086-892d-f2c3c56b05ae Rack1-K8Demo

UN 10.42.0.6 65.86 KiB 32 45.2% 275a5bca-94f4-439d-900f-4d614ba331ee Rack1-K8Demo
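If you prefer not to open an editor, kubectl scale makes the same change to the replicas field non-interactively. The wrapper below is a minimal sketch (scale_statefulset is a hypothetical name) that validates the replica count before calling kubectl; KUBECTL defaults to ./kubectl, and setting KUBECTL=echo prints the command instead of running it.

```shell
# Minimal sketch: non-interactive alternative to `kubectl edit` for changing replicas.
# Usage: scale_statefulset cassandra 4
scale_statefulset() (
  name=$1
  replicas=$2
  # Reject empty or non-numeric replica counts.
  case "$replicas" in
    ''|*[!0-9]*)
      echo "replicas must be a non-negative integer, got '$replicas'" >&2
      exit 2 ;;
  esac
  kubectl=${KUBECTL:-./kubectl}
  "$kubectl" scale statefulset "$name" --replicas="$replicas"
)
```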

Final Note

There is one final note about scaling with the StatefulSet workload. Kubernetes makes it easy to scale up to handle load; however, to ensure data is preserved, Kubernetes keeps all data in place when you scale the number of replicas down. This means you are responsible for making proper backups and deleting everything that was left behind before you can consider the application cleaned up.
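To see what is left behind after scaling down, you can list the persistent volume claims whose ordinal is at or above the new replica count. StatefulSets name each claim <claim-template>-<statefulset>-<ordinal>; the claim template name comes from the downloaded cassandra-statefulset.yaml, which is not reproduced here, so the helper below (a hypothetical sketch) matches only on the StatefulSet name and the ordinal.

```shell
# Minimal sketch: given `kubectl get pvc -o name` on stdin, print the claims that
# belong to StatefulSet <name> and have an ordinal >= <replicas>, i.e. claims left
# behind after scaling down. Relies on the <template>-<statefulset>-<ordinal> naming.
# Usage: ./kubectl get pvc -o name | orphaned_pvcs cassandra 3
orphaned_pvcs() {
  awk -v sts="$1" -v keep="$2" '
    {
      n = split($0, parts, "-")
      ordinal = parts[n]
      suffix = "-" sts "-" ordinal
      end_part = substr($0, length($0) - length(suffix) + 1)
      if (ordinal ~ /^[0-9]+$/ && end_part == suffix && ordinal + 0 >= keep + 0)
        print
    }'
}
```

Only after taking backups would you pass the listed claims to xargs ./kubectl delete. Whether the underlying volume and its data are removed as well may depend on how the storage behind the fast storage class is set up.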